Many organizations are eager to adopt Generative AI (GenAI) for mission-critical systems, but it’s vital to first assess whether you have the fundamental building blocks in place. Here’s a roadmap from the Cloud Kinetics data team that you can use to empower your data teams and build the foundation for a GenAI-powered data strategy.

According to Gartner, at least 30% of GenAI projects will be abandoned after proof of concept by the end of 2025 due to poor data quality, high costs or unclear business value.

Your 4-step blueprint for GenAI success

1. Assess your current data capabilities and needs

Use a data & analytics maturity model to understand your organization’s maturity level. This stocktaking is crucial to the success of your data strategy.

- Are you at an aspiration stage, where you will focus on building a strong data foundation and basic analytics capabilities?

- Or have you progressed to the capability stage, where you can prioritize building a data science team and advanced analytics skills?

- Or has your team reached the competency stage, where you are ready to start exploring GenAI applications?
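To make this stocktaking repeatable, you can score each dimension of your data practice and map the average to one of the three stages above. The sketch below is illustrative only: the 1-to-5 scale, the dimension names and the stage thresholds are all hypothetical assumptions, and a formal maturity model will define its own criteria.

```python
from statistics import mean


def maturity_stage(scores: dict[str, int]) -> str:
    """Map 1-to-5 self-assessment scores per dimension to a maturity stage.

    The thresholds below are hypothetical; calibrate them against the
    maturity model your organization actually adopts.
    """
    if not scores:
        raise ValueError("at least one dimension score is required")
    for dimension, score in scores.items():
        if not 1 <= score <= 5:
            raise ValueError(f"score for {dimension!r} must be between 1 and 5")

    average = mean(scores.values())
    if average < 2.5:
        return "aspiration"  # focus on data foundation and basic analytics
    if average < 3.5:
        return "capability"  # build a data science team and advanced analytics
    return "competency"      # ready to explore GenAI applications
```

For example, `maturity_stage({"data_integration": 2, "governance": 3, "analytics_skills": 2})` averages about 2.33 and lands in the aspiration stage.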

“A good data and analytics strategy starts with a clear vision.” (Gartner)

In addition to your organization’s maturity level, evaluate your team’s skills and knowledge in AI and machine learning. Identify any skill gaps that need to be addressed through training or hiring. Consider the availability of specialized AI talent within your organization or the potential to partner with external experts. By understanding your team’s capabilities, you can create a roadmap for skill development that addresses immediate needs while planning for future expansion.
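A skill-gap analysis can be as simple as comparing the headcount each skill requires with the headcount you have. In this minimal sketch the skill names and required counts in `REQUIRED` are hypothetical placeholders; each reported gap is a candidate for training, hiring or an external partner.

```python
# Hypothetical target: number of people needed per skill for a first GenAI project.
REQUIRED = {
    "python": 2,
    "sql": 2,
    "ml_engineering": 1,
    "prompt_engineering": 1,
    "mlops": 1,
}


def skill_gaps(required: dict[str, int], team: dict[str, int]) -> dict[str, int]:
    """Return the shortfall per skill, listing only skills the team lacks."""
    return {
        skill: needed - team.get(skill, 0)
        for skill, needed in required.items()
        if team.get(skill, 0) < needed
    }
```

With a team of `{"python": 3, "sql": 1, "ml_engineering": 0}`, the function reports a shortfall of one person each for SQL, ML engineering, prompt engineering and MLOps.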

2. Build a robust modern data foundation

One of the first steps in harnessing GenAI is to break down data silos within your organization. Crucial data is often scattered across multiple platforms, making it difficult to get a unified view. Collecting, integrating and processing this data into a single, cohesive platform is essential for effective AI applications.

Think of a retailer with customer data locked in outdated systems. By consolidating this data, they can unlock powerful insights and deliver personalized customer experiences.
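As a rough illustration of the consolidation step, the sketch below merges customer records from several systems into one profile per customer. It assumes, hypothetically, that a normalized email address reliably identifies a customer across systems; real entity resolution usually needs fuzzier matching. The source systems and field names are illustrative.

```python
from collections import defaultdict


def normalize_email(email: str) -> str:
    """Normalize an email so the same customer matches across systems."""
    return email.strip().lower()


def consolidate(*sources: list[dict]) -> dict[str, dict]:
    """Merge customer records from several systems into one profile per email.

    Sources are processed in order; the first non-empty value seen for a
    field is kept, so pass the most trusted system first.
    """
    profiles: dict[str, dict] = defaultdict(dict)
    for records in sources:
        for record in records:
            key = normalize_email(record["email"])
            profile = profiles[key]
            for field, value in record.items():
                if field == "email":
                    continue
                # Only fill fields that are still missing, and ignore blanks.
                if value not in (None, "") and field not in profile:
                    profile[field] = value
            profile["email"] = key
    return dict(profiles)
```

For example, a point-of-sale record for `"Ana@Example.com "` and a web-store record for `"ana@example.com"` collapse into a single profile that carries the store from one system and the last order date from the other.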

Once the data is integrated, it’s vital to ensure that it is clean, well-governed and secure. Poor data quality can lead to flawed AI outputs, while weak governance and security can expose your organization to risks. Building a modern data foundation with these principles in mind lays the groundwork for reliable and impactful AI applications.
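Data quality checks are easiest to enforce when they run automatically on every batch. The minimal sketch below counts three common problems; the field names `customer_id` and `email` and the deliberately loose email pattern are assumptions, and a production pipeline would typically use a dedicated validation framework.

```python
import re

# Deliberately loose pattern: something@something.something, no whitespace.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def quality_report(
    records: list[dict], required: tuple[str, ...] = ("customer_id", "email")
) -> dict[str, int]:
    """Count missing required fields, malformed emails and duplicate IDs."""
    report = {"missing_required": 0, "invalid_email": 0, "duplicate_id": 0}
    seen_ids = set()
    for record in records:
        if any(record.get(field) in (None, "") for field in required):
            report["missing_required"] += 1
        email = record.get("email")
        if email and not EMAIL_PATTERN.match(email):
            report["invalid_email"] += 1
        customer_id = record.get("customer_id")
        if customer_id is not None:
            if customer_id in seen_ids:
                report["duplicate_id"] += 1
            seen_ids.add(customer_id)
    return report
```

A pipeline can then refuse to publish a batch to downstream AI applications whenever any count in the report is non-zero.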

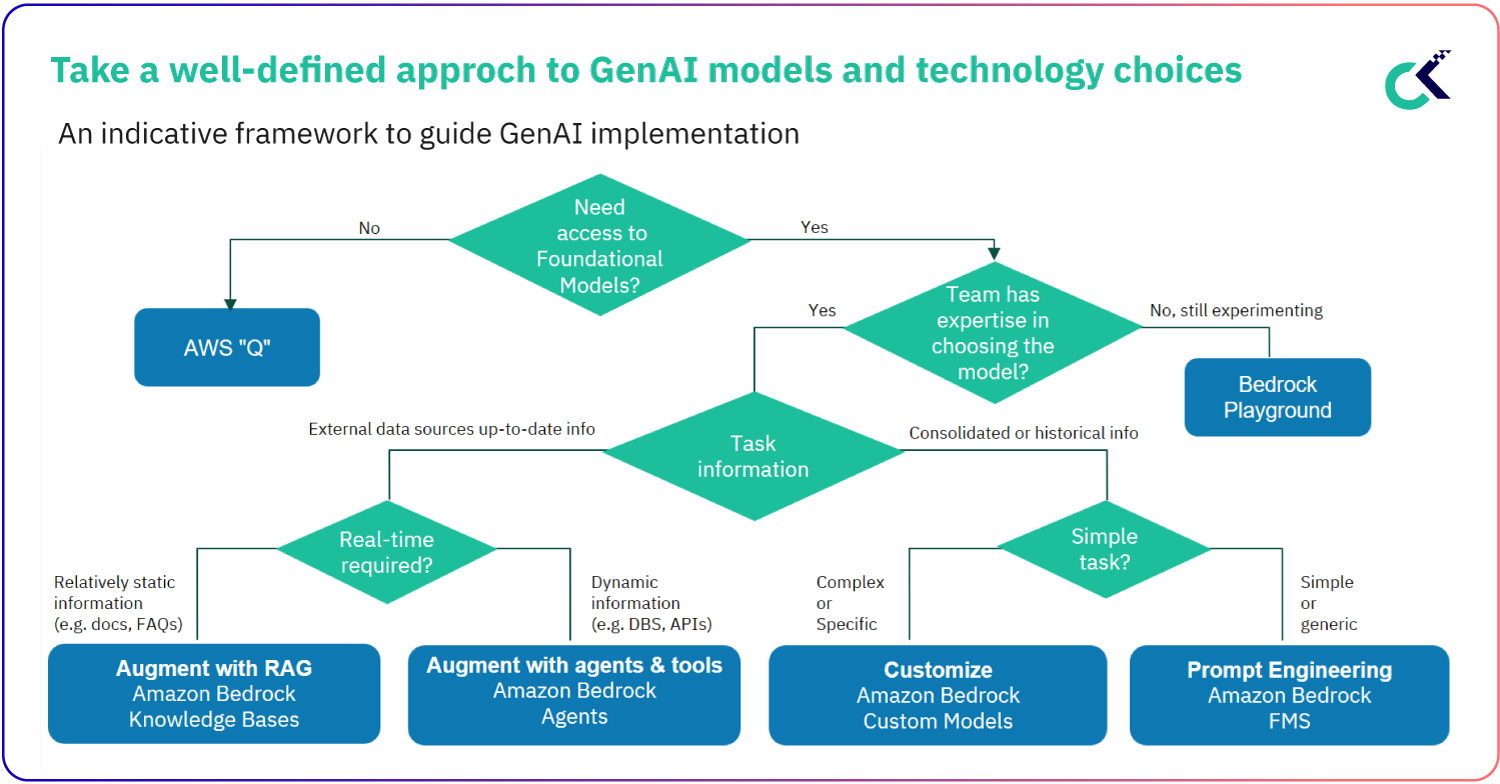

3. Make the right technology and model choices for your GenAI & data needs

Choosing the right technology and AI models is crucial for project success. Consider factors like your team’s expertise, available data and cost. If your team is comfortable working with open-source models, such as those published on Hugging Face, you gain flexibility but take on more maintenance. Managed services such as Amazon Bedrock or Amazon SageMaker are easier to implement and require less upkeep, but offer less customization.

Balancing these factors is key to a successful AI implementation. Opt for solutions that your team can manage effectively, that leverage your available data, and that fit within your budget. A well-thought-out choice here can save you time and money in the long run while ensuring robust performance.

Remember, AI performance is not just about speed and processing power; it is also about delivering results without ballooning costs. Proprietary systems can inflate total cost of ownership (TCO) with specialized hardware, licensing fees and other hidden costs that make scaling more expensive in the long term.

A common misconception is that every AI workload must run on expensive GPUs. GPUs are powerful, but they are not always the most efficient or necessary option for every type of task.
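One way to test the GPU assumption is to compare deployment options on cost per request and yearly cost rather than on raw speed. The sketch below does that arithmetic; every price and throughput figure in it is a hypothetical placeholder, not a benchmark, so substitute numbers you measure for your own workload.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 24 * 365


@dataclass
class DeploymentOption:
    name: str
    hourly_price: float               # compute price per instance-hour
    requests_per_second: float        # sustained throughput measured for your workload
    annual_license_fee: float = 0.0   # software licensing or support fees


def cost_per_million_requests(option: DeploymentOption) -> float:
    """Compute-only cost of serving one million requests at full utilization."""
    requests_per_hour = option.requests_per_second * 3600
    return option.hourly_price / requests_per_hour * 1_000_000


def annual_tco(option: DeploymentOption, instances: int) -> float:
    """Yearly cost of `instances` always-on instances plus licensing fees."""
    return instances * option.hourly_price * HOURS_PER_YEAR + option.annual_license_fee
```

With the hypothetical figures `DeploymentOption("gpu", 4.0, 200.0)` and `DeploymentOption("cpu", 1.0, 60.0)`, the GPU option is faster per instance, yet the CPU option costs about 4.63 per million requests versus about 5.56 for the GPU. The point is not the numbers themselves but that throughput and price must be weighed together.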

When selecting a model, evaluate accuracy, cost, latency and scalability. Balancing these factors ensures the chosen solution aligns with your team’s capabilities, available resources, and long-term goals.
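A weighted scoring matrix is a simple way to make this balancing explicit and comparable across candidates. In the sketch below the weights, the 1-to-5 rating scale and the candidate names are hypothetical; the one convention that matters is rating every criterion so that higher is better (for example, 5 means cheapest for cost and fastest for latency).

```python
# Hypothetical weights reflecting what matters most to the project (sum to 1).
WEIGHTS = {"accuracy": 0.4, "cost": 0.3, "latency": 0.2, "scalability": 0.1}


def weighted_score(ratings: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Combine per-criterion ratings (higher is better) into one weighted score."""
    missing = weights.keys() - ratings.keys()
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return sum(weights[criterion] * ratings[criterion] for criterion in weights)


def rank_models(candidates: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Return (model, score) pairs sorted from best to worst."""
    scored = [(name, weighted_score(ratings)) for name, ratings in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)
```

In this scheme, a model rated 5 for accuracy but 2 for cost can score lower (3.6) than a model rated 4 across the board (4.0), which is exactly the kind of trade-off the weights are meant to surface.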

The AI universe is constantly evolving, and it calls for solutions designed not just for today, but for tomorrow. Proprietary systems often lock clients into specific technologies, making it costly and difficult to upgrade as AI capabilities advance. Look for open, future-proof AI systems that adapt to new technologies and workloads. This long-term flexibility gives you confidence that your AI investments will remain viable, no matter how your needs evolve.
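At the code level, one common way to avoid lock-in is the adapter pattern: application logic depends on a small interface, and each model provider sits behind its own adapter. In this minimal sketch, `TextGenerator`, the two toy backends and `summarize_ticket` are hypothetical names; a real adapter would wrap a hosted or self-hosted model SDK.

```python
from typing import Protocol


class TextGenerator(Protocol):
    """The only model interface the application depends on."""

    def generate(self, prompt: str) -> str: ...


class EchoGenerator:
    """Toy backend for tests; a real adapter would call a model provider."""

    def generate(self, prompt: str) -> str:
        return f"echo: {prompt}"


class UppercaseGenerator:
    """Second toy backend, showing that implementations are interchangeable."""

    def generate(self, prompt: str) -> str:
        return prompt.upper()


def summarize_ticket(model: TextGenerator, ticket: str) -> str:
    """Application logic written against the interface, not a specific vendor."""
    return model.generate(f"Summarize this support ticket: {ticket}")
```

Switching providers then means writing one new adapter class, while `summarize_ticket` and the rest of the application stay unchanged.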

4. Use tools and accelerators to advance your AI & GenAI dev lifecycle

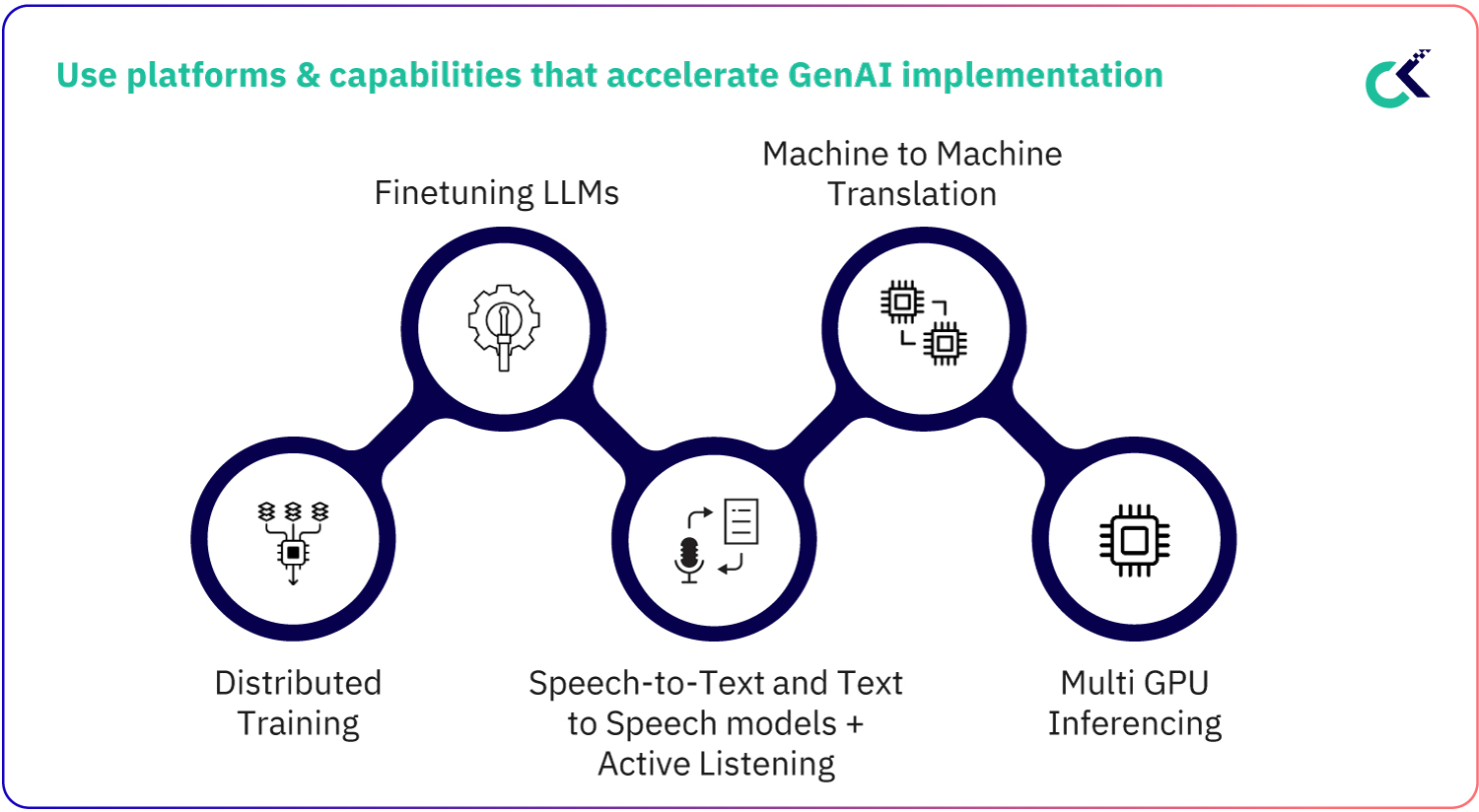

Don’t reinvent the wheel: use available cutting-edge tools and platforms to accelerate your GenAI projects. The goal is to streamline the process from data collection to actionable insights. Accelerators and pre-built tools can help you move quickly from concept to deployment, so that your AI applications deliver value rapidly and efficiently.

If you are fine-tuning large language models (LLMs), platforms that offer distributed training and fine-tuning can significantly reduce the time from data to insights. These tools not only speed up development but also help optimize your AI models for performance and cost.

- To optimize AI pipelines, organizations can turn, for example, to the latest Intel Xeon processors with Intel Advanced Matrix Extensions (Intel AMX), a built-in AI accelerator. Part of Intel® AI Engines, Intel AMX was designed primarily to accelerate inference, the most prominent use case for a CPU in AI applications, while also adding capabilities for training.

- To optimize Retrieval-Augmented Generation (RAG) pipelines, LangChain is a powerful tool for orchestrating workflows across different data sources. It connects language models with databases, APIs and cloud storage, enabling seamless chaining of tasks like data retrieval and prompt transformation. This flexibility makes it easier to build scalable RAG systems that quickly retrieve relevant information and use it to augment responses.

- For managing embeddings, Knowledge Bases for Amazon Bedrock offers a managed solution: it converts your data into embeddings using a supported embedding model and stores them in a vector store. It provides scalable, efficient storage for embeddings, allowing fast retrieval of contextually relevant information from multiple data sources.

- By combining LangChain for orchestration with Knowledge Bases for Amazon Bedrock for embedding storage and retrieval, you can build a highly efficient, adaptable RAG pipeline that accelerates insights and enhances AI applications.
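The retrieve, augment and generate steps of such a pipeline can be sketched in plain Python. This is a minimal sketch under stated assumptions, not LangChain code: `client` is assumed to be a boto3 `bedrock-agent-runtime` client, whose `retrieve` call takes `knowledgeBaseId`, `retrievalQuery` and an optional `retrievalConfiguration` and returns passages under `retrievalResults`. The function names, the prompt wording and the `generate` callable are hypothetical.

```python
from typing import Any, Callable


def retrieve_context(client: Any, knowledge_base_id: str, question: str, top_k: int = 3) -> list[str]:
    """Fetch the top_k most relevant passages from a Bedrock knowledge base."""
    response = client.retrieve(
        knowledgeBaseId=knowledge_base_id,
        retrievalQuery={"text": question},
        retrievalConfiguration={"vectorSearchConfiguration": {"numberOfResults": top_k}},
    )
    return [result["content"]["text"] for result in response.get("retrievalResults", [])]


def build_prompt(question: str, passages: list[str]) -> str:
    """Augment the user question with numbered retrieved passages."""
    context = "\n\n".join(f"[{i}] {passage}" for i, passage in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


def answer(client: Any, knowledge_base_id: str, question: str, generate: Callable[[str], str]) -> str:
    """Chain the steps: retrieve passages, augment the prompt, then generate."""
    passages = retrieve_context(client, knowledge_base_id, question)
    return generate(build_prompt(question, passages))
```

With AWS credentials configured, you would pass `boto3.client("bedrock-agent-runtime")` as `client` along with your knowledge base ID, and `generate` could wrap a model call such as the `bedrock-runtime` Converse API. In LangChain, the same three steps map onto a retriever, a prompt template and a chat model composed into a chain; the plain-Python version is shown here so it does not depend on a particular LangChain release.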

By following these steps, you will be well on your way to implementing GenAI in a way that is both strategic and sustainable, driving meaningful outcomes for your organization.